Conclusion

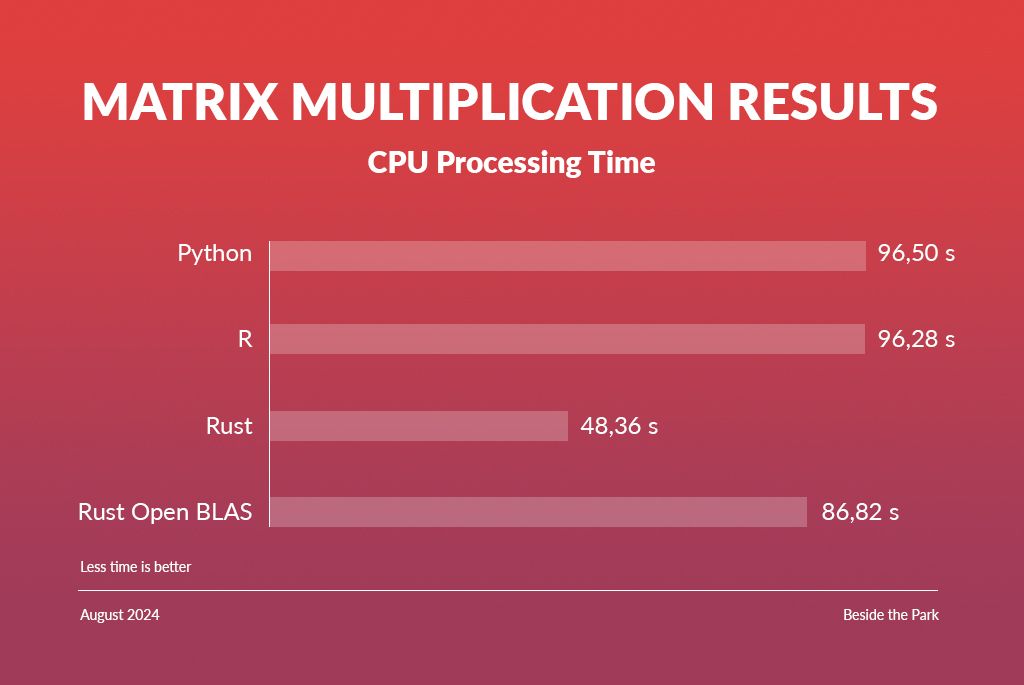

The matrix multiplication test shows that the languages are generally able to achieve similar performance for common operations included in most linear algebra libraries. This is unsurprising, as in these cases, the tested language serves mostly as a wrapper around said libraries.

The implementation of the test was relatively simple in all three languages, though Rust required considerable initial setup to get its library to use the BLAS backend. R and Python/Numpy worked effectively out of the box.

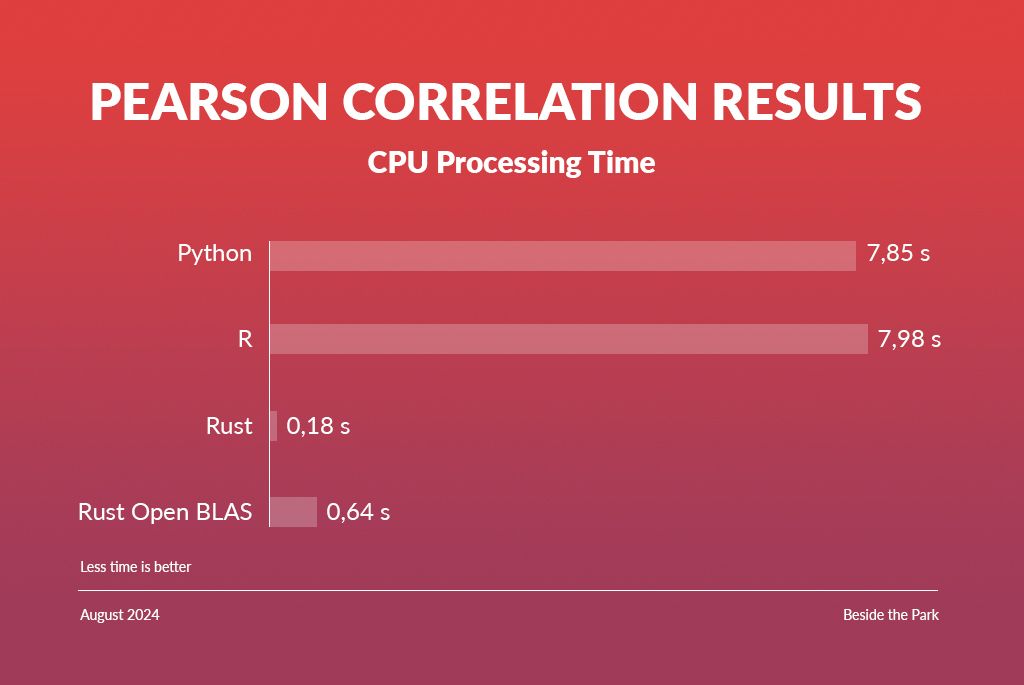

The Pearson Correlation test shows a stark difference between the languages, as Rust outperforms R and Python by over an order of magnitude. This is likely because, unlike in the previous test, the functions provided by the underlying linear algebra libraries could not easily be wrapped to implement the desired result, and large parts of the logic had to be coded in the tested language.

Rust does however have some serious drawbacks in regard to data-science. The community and libraries feel younger and less unified than their Python and R equivalents, with many crates being unstable, and using different underlying structures, making combining their functionalities complicated. Rust's borrow checker and extensive compile time error detection, while in part responsible for Rust's speed and safety, can often slow down prototyping, and is in part responsible for the language's very limited support, which are commonly used in data-science.

Due to the immaturity of Rust’s community libraries in cases where performance is vital, you might want to do more in depth research before using it.

| Python numpy | R | Rust ndarray | Rust ndarray - OpenBLAS | |

|---|---|---|---|---|

| Matrix multiplication | 96.5s | 96.28s | 48.36s | 86.82s |

| Pearson correlation matrix | 7.85s | 7.98s | 0.18s | 0.64s |

Purpose of the research

Data scientists love using Python or R. These are very high level languages designed for boosting software development efficiency. The speed of writing code using these languages encourages experimentation, and testing of various ideas and solutions. These make them great tools for research and concept proving. In many cases however at some point we would like to implement the results of the research into a form of software that works at scale. Ideally we could use the same technology for the production code. Nevertheless, the question is, 'how much performance do we have to sacrifice when using interpreted languages over solutions built in low level compiled languages?' The aim of this research is to answer this question.

As a performance oriented counterpart we were chose between C and Rust. Both are very low-level, compiled languages which don't use a garbage collector for managing memory. Both are considered as the languages that can be used to achieve the best speed. From the performance perspective we expect that both technologies can give very similar results. We chose Rust because apart from the performance benchmark we wanted to examine whether this relatively new technology achieved sufficient maturity to be used in live data science/big data projects.

Why data science projects?

Nowadays big data is quite a popular phrase within the technological field. The idea of 'big data' is to manage and process large volumes of data, while 'data science' means to interpret and analyze data.

Data science projects are crucial and increasingly popular because they drive innovation across various industries and life factors. With the help of statistical models and new machine learning techniques, we can gain very useful insights that can be used to learn what happened, why it happened, and predict what can potentially happen in the future.

As a result, the demand for data science skills and projects continues to rise, making it one of the biggest technological and business related tools today.

Which languages are we considering?

Python

Python is a high-level, programming language that has recently evolved significantly and became one of the most popular ones worldwide. Python supports multiple programming paradigms, including procedural, object-oriented, and functional programming, making it a flexible choice for various applications.

It can be used for a wide range of applications, such as web development, scientific computing, artificial intelligence, data analysis, etc. Its versatility is one of the key reasons for its widespread adoption. Python is very often a preferred technology for data science projects, given its community developed state-of-the-art mathematical, statistical, data science, AI, and data visualization libraries.

R

R is an implementation of the S programming language. Since its inception, R has pretty much become a standard tool among statisticians and data scientists for data analysis and visualization. The "R Project", an open-source initiative, has many followers who continuously contribute to its development and expansion. It excels in statistical computing, offering a lot of statistical techniques such as linear and nonlinear modeling, classical statistical tests, time-series analysis, classification, clustering, etc.

It also provides many powerful tools for data visualization. Packages like ggplot2, lattice, and plotly allow users to create static, dynamic, and interactive graphics. These tools are essential for exploratory data analysis and presenting findings effectively.

Nowadays R is mostly used by:

- Data scientists

- Data analysts

- Statistical engineers

Rust

One of Rust's most celebrated features is its strict memory safety guarantees. It ensures that programs are free from common memory errors such as null pointer dereferencing or buffer overflows. This is enforced at the compile time, which prevents many bugs before the program even runs.

Rust's excellent performance, great safety along with its active community and detailed documentation, makes it a fine choice for a wide range of applications. Whether you're developing high performance software, web apps or even command line tools, you can be assured that it provides all the features and tools needed to deliver good and efficient results. However, the learning curve is probably a little bit steeper compared to the previous two languages.

Performance comparison

Environment

- Python version: 3.8.10

- Pandas version: 1.4.3

- R version: 3.6.3

- Rust version: 1.62.0

- Ndarray version: 0.15

- Ndarray-stats version: 0.5.1

Note

Real time - overall time elapsed. Process time - the sum of the system and user CPU time of the current process.

Matrix multiplication

Matrix multiplication is an important tool that has numerous uses in many areas of statistics and machine learning. This experiment measures the speed of multiplying two 10000 long square matrices.

Python numpy

import numpy as np

import time

n = 10000

a = np.random.uniform(size=(n, n))

start, start_p = time.time(), time.process_time()

a.dot(a)

print(f"Real time: {time.time() - start} \nProcess time: {time.process_time() - start_p}")

- Real time: 13.34977388381958s

- Process time: 96.50351426700001s

The process time is significantly longer than real time taken, as numpy, and the OpenBLAS linear algebra library it uses, make use of multithreading to compute on multiple CPU cores concurrently.

R

n <- 10000

data = matrix(rexp(n*n, rate=.1), ncol=n)

start_p.time <- proc.time()

data %*% data

t = proc.time() - start_p.time

writeLines(sprintf("Real time: %f \nProcess time: %f", t["elapsed"], t["user.self"]))

- Real time: 23.890000s

- Process time: 96.279000s

The process time appears comparable to that of Python, but for unclear reasons, the real time taken is about twice as long.

Rust ndarray

use std::time::Instant;

use ndarray::prelude::*;

use cpu_time::ProcessTime;

fn main() {

const N: usize = 10000;

let mut a = Array::zeros(N * N)

.mapv_into(|_| rand::random::())

.into_shape((N, N))

.unwrap();

let (start, start_p) = (Instant::now(), ProcessTime::try_now().unwrap());

a.dot(&a);

println!("Real time: {:?} \nProcess time: {:?}", start.elapsed(), start_p.elapsed());

}

Ensuring the code runs in the release build, or with increased compiler optimizations, can make a significant performance difference.

Default implementation:

- Real time: 48.494994136s

- Process time: 48.358398508s

The default ndarrays matrix multiplication implementation takes roughly half the process time of numpy, but does not utilize multithreading, causing the real time performance to be significantly slower on CPU with multiple cores. This can be reprimanded by enabling the BLAS feature on ndarray, causing it to use a different linear algebra backend.

For the next test, the OpenBLAS backend (same as numpy) was used:

- Real time: 11.075091172s

- Process time: 86.823806584s

The resulting performance is nearly identical to that of numpy.

Pearson correlation matrix

The Pearson correlation coefficient is a simple method of measuring the linear correlation between two sets of data.

ρX,Y = cov(X,Y) / σXσY

Python

import numpy as np

import pandas as pd

import time

N = 2000 # Number of samples

M = 1000 # Observations per sample

df = pd.DataFrame(np.random.uniform(size=(M, N)))

start, start_p = time.time(), time.process_time()

corr = df.corr()

print(f"Real time: {time.time() - start} \nProcess time: {time.process_time() - start_p}")

In Python, the correlation was performed using the popular data analysis library "pandas".

- Real time: 7.853463649749756s

- Process time: 7.847832941600001s

The real time and process time are very similar, meaning that the calculations do not make extensive use of multithreading.

R

N <- 2000

M <- 1000

data = matrix(runif(N*M), ncol=N)

start.time <- proc.time()

cor <- cor(data, use = "pairwise.complete.obs")

t = Sys.time() - start.time

writeLines(sprintf("Real time: %f \nProcess time: %f", t["elapsed"], t["user.self"]))

In R, the correlation was performed using the cor function in the base stats library.

- Real time: 7.982700s

- Process time: 7.983400s

The result is very similar to the one achieved by Python.

When the pairwise.complete.obs argument is removed, a NaN value in the time series will cause all correlations

of its sample to equal NaN, but it will improve the computation time:

- Real time: 2.452200s

- Process time: 2.448600s

Rust

use ndarray::prelude::*;

use cpu_time::ProcessTime;

fn main() {

const N: usize = 2000;

const M: usize = 1000;

let mut a = Array::zeros(N * M)

.mapv_into(|_| rand::random::())

.into_shape((N, M)).unwrap();

let (start, start_p) = (Instant::now(), ProcessTime::try_now().unwrap());

let corr = a.pearson_correlation().unwrap();

println!("Real time: {:?} \nProcess time: {:?}", start.elapsed(), start_p.elapsed());

}

In Rust, the correlation was performed using the pearson_correlation function in the base ndarray_stats library.

The pearson_correlation function does not perform NaN exclusion.

The code should be run in the release build, or with a high compiler optimisation level to ensure optimal performance.

Default backend:

- Real time: 179.782593ms ~ 0.179782593s

- Process time: 179.65344ms ~ 0.17965344s

OpenBLAS backend:

- Real time: 197.422685ms ~ 0.197422685s

- Process time: 635.050419ms ~ 0.635050419s

Interestingly in this case the BLAS backend performs slightly worse than the default ndarray backend.

This could be explained by the pearson_correlation function being optimized for the default implementation

and not making effective use of the features BLAS provides.

So which one to choose?

Rust is clearly outperforming Python and R when it comes to performance and efficiency. Nevertheless the immaturity of the community-developed libraries along with its steep learning curve makes the decision of using Rust a less obvious choice. That being said, for projects where performance and safety are key, we would still consider Rust on a very short list of viable choices. The fun to code in Rust is an extra bonus!

If you need help choosing appropriate tools for your project, don't be scared to contact us!

Note

Please keep in mind that the results above can differ if you run the code locally. You can give it a try!

References

https://www.techspot.com/news/97654-how-broken-elevator-led-one-most-loved-programming.html